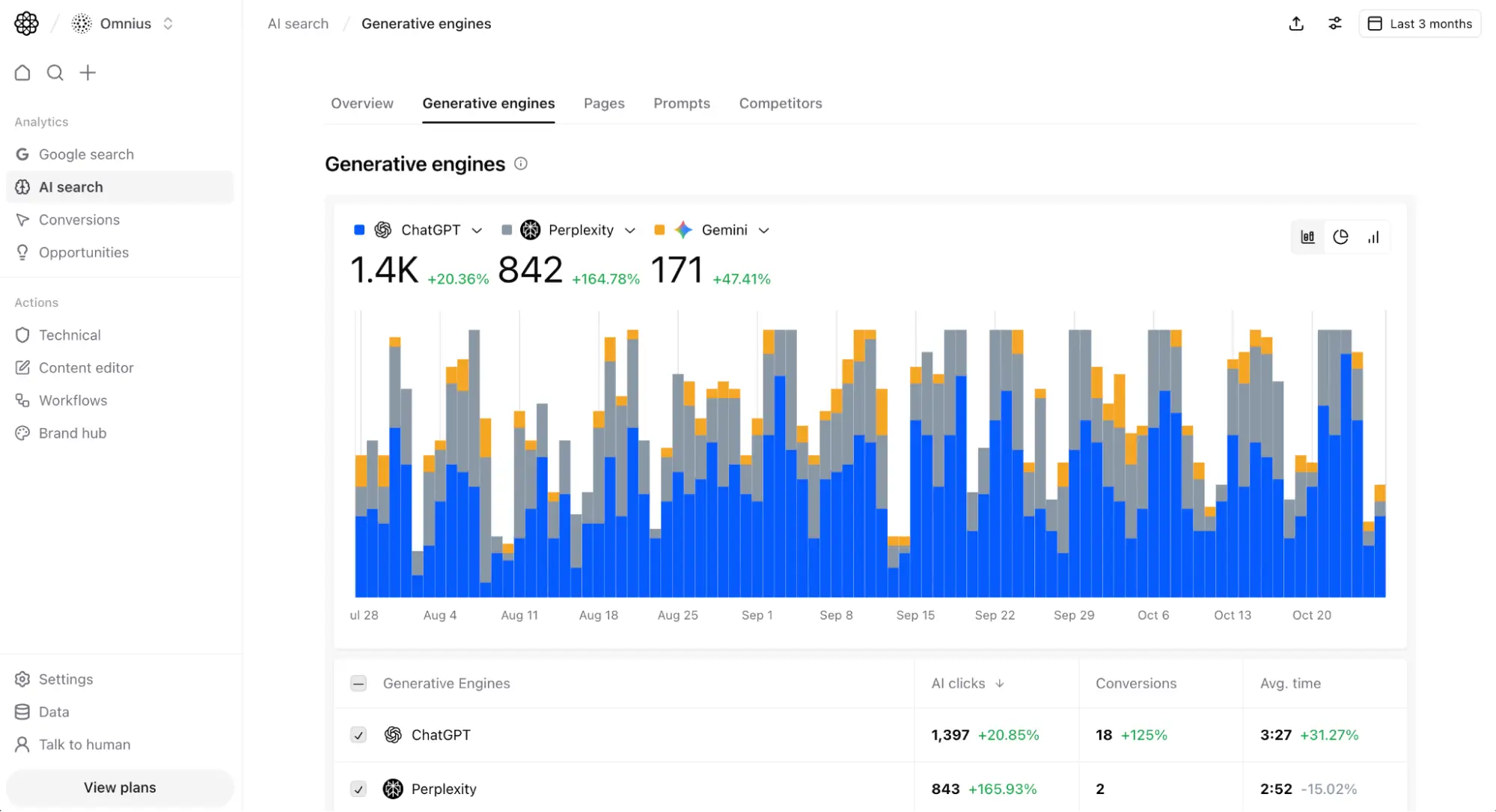

The Click Source Analysis view breaks down how different AI search engines drive visibility and interaction with your content. Each engine shows distinct behavioral patterns, depending on how users query it and how they consume and follow its generated answers. Atomic’s analysis captures this variation using evidence-based data drawn from server-side and client-side tracking.

By displaying verified AI clicks, conversion events, and average on-site time per engine, the module turns what has so far been directional market signal into measurable evidence. Teams can use this information to identify which engines generate genuine user traffic and which reference brand content without driving sessions.

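AI search engines operate differently from traditional search systems. Instead of returning lists of ranked links, they generate answers that may cite sources, summarize content, or link contextually. Atomic’s data model detects and logs these interactions through a two-layer framework: an evidence layer of verified clicks captured from server and analytics data, and a modeled layer that estimates non-click visibility from recurring mentions.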

The data is continuously validated through Atomic’s hybrid evidence-plus-synthetic pipeline, which assigns each event a confidence level. Evidence clicks represent verifiable traffic; modeled clicks reflect directional presence. This hybrid approach is designed to give the most accurate view of AI-assisted discovery available today.
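As a rough illustration of the two layers, the Python sketch below attaches a layer and a confidence label to each event. The `AIEvent` fields, the rule that a server log or analytics session makes an event "evidence", and the mention threshold of 3 are all hypothetical choices made for this example; they are not Atomic’s schema or classification logic.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Layer(Enum):
    EVIDENCE = "evidence"  # verified click backed by server or analytics data
    MODELED = "modeled"    # estimated visibility inferred from mentions


@dataclass(frozen=True)
class AIEvent:
    engine: str
    has_server_log: bool         # hypothetical: a server-side hit was recorded
    has_analytics_session: bool  # hypothetical: a matching analytics session exists
    mention_count: int           # hypothetical: recurring mentions observed


def classify(event: AIEvent) -> Tuple[Layer, str]:
    """Assign a layer and a coarse confidence label (hypothetical rules)."""
    if event.has_server_log and event.has_analytics_session:
        return Layer.EVIDENCE, "high"    # two independent sources agree
    if event.has_server_log or event.has_analytics_session:
        return Layer.EVIDENCE, "medium"  # verified by a single source
    # No click evidence: fall back to modeled visibility from mentions.
    if event.mention_count >= 3:  # hypothetical threshold
        return Layer.MODELED, "medium"
    return Layer.MODELED, "low"
```

Keeping the layer and the confidence label as separate values lets a report filter to evidence-only clicks while still showing modeled presence alongside it.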

Every metric shown in the AI Search Click Source Analysis is derived from normalized, timestamped data sources.

All data is reconciled across GA4, Atomic detectors, and engine-specific visibility reports. The validation pipeline removes duplicate sessions, normalizes timing differences between sources, and flags anomalies caused by model updates or API inconsistencies.

This process produces reliable, reproducible metrics that distinguish verifiable clicks from mere citations.
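The deduplication and time-normalization steps can be sketched in Python as follows. This is a minimal sketch assuming every source reports a shared `session_id` and a timezone-aware start time; the `RawSession` type and the keep-the-earliest-record rule are hypothetical, and the anomaly-flagging step is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class RawSession:
    session_id: str       # hypothetical identifier shared across sources
    source: str           # e.g. "ga4" or "detector"
    engine: str
    started_at: datetime  # must be timezone-aware


def reconcile(sessions: List[RawSession]) -> List[RawSession]:
    """Normalize start times to UTC and keep one record per session_id."""
    merged: Dict[str, RawSession] = {}
    for s in sessions:
        # Convert to UTC so sources reporting in different time zones line up.
        utc = RawSession(s.session_id, s.source, s.engine,
                         s.started_at.astimezone(timezone.utc))
        kept = merged.get(s.session_id)
        # Duplicates collapse to the earliest start; ties keep the first record seen.
        if kept is None or utc.started_at < kept.started_at:
            merged[s.session_id] = utc
    return sorted(merged.values(), key=lambda r: r.started_at)


# Example: one session reported by GA4 (UTC) and by a detector (UTC-5).
example = reconcile([
    RawSession("a", "ga4", "ChatGPT",
               datetime.fromisoformat("2025-01-01T12:00:00+00:00")),
    RawSession("a", "detector", "ChatGPT",
               datetime.fromisoformat("2025-01-01T07:00:00-05:00")),
    RawSession("b", "ga4", "Perplexity",
               datetime.fromisoformat("2025-01-01T13:00:00+00:00")),
])
```

Both reports of session `a` describe the same instant once converted to UTC, so only one survives and the session is counted once.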

Generative search traffic is growing quickly yet remains opaque in traditional analytics. In 2025, more than 60% of English-language queries are projected to surface some form of AI-generated result. However, fewer than a quarter of these yield measurable user clicks. Without source-level analysis, teams cannot distinguish visibility from engagement or prioritize the AI ecosystems worth optimizing for.

The Click Source module closes that gap. It quantifies which AI engines deliver true traffic, how engaged those visitors are, and how this performance evolves over time. For example, a brand might observe that ChatGPT generates most mentions but Perplexity provides higher conversion rates due to its open-link policy.

Each row in the Generative Engines table represents one traffic source.
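Conceptually, each row is an aggregate over verified sessions for one engine. The Python sketch below groups sessions by engine and computes clicks, conversions, conversion rate, and average on-site time. The `Session` and `EngineRow` types are hypothetical, and the input data is invented purely to exercise the function.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Session:
    engine: str
    converted: bool         # hypothetical: whether a conversion event fired
    seconds_on_site: float


@dataclass(frozen=True)
class EngineRow:
    engine: str
    clicks: int
    conversions: int
    conversion_rate: float
    avg_seconds_on_site: float


def build_rows(sessions: List[Session]) -> List[EngineRow]:
    """Aggregate verified sessions into one row per engine, sorted by clicks."""
    by_engine: Dict[str, List[Session]] = defaultdict(list)
    for s in sessions:
        by_engine[s.engine].append(s)
    rows = []
    for engine, group in by_engine.items():
        clicks = len(group)  # each verified session counts as one AI click
        conversions = sum(s.converted for s in group)
        rows.append(EngineRow(
            engine=engine,
            clicks=clicks,
            conversions=conversions,
            conversion_rate=conversions / clicks,
            avg_seconds_on_site=sum(s.seconds_on_site for s in group) / clicks,
        ))
    return sorted(rows, key=lambda r: r.clicks, reverse=True)


# Invented sample data.
example = build_rows([
    Session("ChatGPT", False, 40.0),
    Session("ChatGPT", True, 80.0),
    Session("ChatGPT", False, 30.0),
    Session("Perplexity", True, 120.0),
    Session("Perplexity", True, 60.0),
])
```

Sorting by click volume puts the highest-traffic engine first, while the `conversion_rate` column can still reveal a lower-volume engine that converts better, as in the ChatGPT and Perplexity scenario described above.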

Tracking engine-level differences helps teams understand audience intent and content suitability across models.

Teams use this module to monitor how each engine’s traffic and engagement change over time and to catch anomalies early.

For example, if Perplexity shows a 165% increase in clicks but a decline in session time, Atomic highlights that anomaly, allowing teams to trace it back to prompt context or on-page experience issues.
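A divergence check like the one in this example can be expressed as a comparison of two period-over-period changes. In the Python sketch below, the 50% click-surge threshold, the function names, and the input numbers are hypothetical; only the 165% figure comes from the example above.

```python
def pct_change(previous: float, current: float) -> float:
    """Percentage change from previous to current (previous must be non-zero)."""
    return (current - previous) * 100.0 / previous


def flag_divergence(prev_clicks: float, curr_clicks: float,
                    prev_avg_time: float, curr_avg_time: float,
                    click_surge_pct: float = 50.0) -> bool:
    """Flag an engine whose clicks surge while average session time falls.

    click_surge_pct is a hypothetical threshold, not an Atomic setting.
    """
    clicks_up = pct_change(prev_clicks, curr_clicks) >= click_surge_pct
    time_down = pct_change(prev_avg_time, curr_avg_time) < 0
    return clicks_up and time_down


# Illustrative numbers: clicks rise from 200 to 530 (+165%), time drops 90s -> 70s.
surge = flag_divergence(200, 530, 90.0, 70.0)
```

Requiring both conditions keeps ordinary growth (more clicks, steady engagement) from being flagged; only the mismatch between volume and engagement is surfaced.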

AI Search Click Source Analysis connects with other Atomic modules to create a continuous feedback loop.

This interoperability turns isolated metrics into search intelligence: a unified picture of performance across multi-engine ecosystems.

By tracking how each AI engine interacts with your content, Atomic enables data-driven decisions about optimization priorities. Teams can allocate technical and content effort where it drives measurable engagement, not just mentions. This precision helps visibility strategies keep pace with user behavior across the rapidly diversifying landscape of generative search.

AI clicks represent verified user sessions that originate from AI search engines. They are captured via server and analytics evidence, not modeled estimates.

Atomic currently supports ChatGPT, Perplexity, Gemini, Claude, Copilot, DeepSeek, Poe, and iAsk, and adds support for new engines as they emerge.
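One common way to attribute a session to an AI engine is to match the hostname of its HTTP referrer. The Python sketch below does this with the standard library; the hostname-to-engine table is an illustrative assumption, not Atomic’s detector list. Some engines or apps may not send a referrer at all, so this check on its own can undercount AI clicks.

```python
from typing import Optional
from urllib.parse import urlparse

# Illustrative hostname-to-engine map (assumption, not Atomic's detector list).
ENGINE_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
    "deepseek.com": "DeepSeek",
    "poe.com": "Poe",
    "iask.ai": "iAsk",
}


def engine_from_referrer(referrer: str) -> Optional[str]:
    """Return the AI engine for a referrer URL, or None if it is not recognized."""
    host = urlparse(referrer).hostname or ""  # urlparse lowercases the hostname
    for domain, engine in ENGINE_DOMAINS.items():
        # Match the domain itself or any subdomain (e.g. www.perplexity.ai).
        if host == domain or host.endswith("." + domain):
            return engine
    return None
```

Matching on a leading dot avoids false positives such as `notperplexity.ai`, which ends with the same characters but is a different domain.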

Evidence data updates daily, while modeled visibility updates continuously as new detections occur.

Engines differ in how they present citations. Some open links in secondary views, reducing conversion events even when visibility is high.

Engagement time varies by query intent and engine design. For instance, longer sessions from Gemini often reflect exploratory research behavior.

The module stores time-series data for each engine, allowing historical comparison by click volume, conversion rate, and engagement duration.
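Because the data is kept as a time series, period-level metrics can be rebuilt from daily totals. The Python sketch below rolls one engine’s daily points up into ISO weeks for comparison; the `DailyPoint` type and its fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class DailyPoint:
    day: date
    clicks: int
    conversions: int
    total_seconds: float  # summed on-site time for that day's sessions


def weekly_rollup(points: List[DailyPoint]) -> Dict[Tuple[int, int], Dict[str, float]]:
    """Group one engine's daily points by ISO (year, week) for comparison."""
    buckets: Dict[Tuple[int, int], List[DailyPoint]] = defaultdict(list)
    for p in points:
        iso = p.day.isocalendar()
        buckets[(iso[0], iso[1])].append(p)
    summary = {}
    for week, group in sorted(buckets.items()):
        clicks = sum(p.clicks for p in group)
        conversions = sum(p.conversions for p in group)
        seconds = sum(p.total_seconds for p in group)
        summary[week] = {
            "clicks": clicks,
            "conversion_rate": conversions / clicks if clicks else 0.0,
            "avg_seconds": seconds / clicks if clicks else 0.0,
        }
    return summary


# Invented sample data: two days in ISO week 2 of 2025, one day in week 3.
history = weekly_rollup([
    DailyPoint(date(2025, 1, 6), 10, 1, 600.0),
    DailyPoint(date(2025, 1, 7), 30, 2, 1200.0),
    DailyPoint(date(2025, 1, 13), 20, 4, 1000.0),
])
```

Storing summed seconds rather than daily averages means the weekly average is weighted by clicks instead of averaging the averages, so a low-traffic day cannot distort the week.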

Modeled metrics carry confidence labels. Evidence clicks are verified via analytics, while modeled clicks estimate non-click visibility based on recurring mentions.
